Devin Pham

I work at the intersection of robotics and machine learning, where I focus on building perception systems that help autonomous mobile robots understand and interact with the world around them. I also develop full-stack web applications that interface with robots to enable real-time monitoring, control, and visualization of their behavior and data.

If you are interested in my work, feel free to reach out to me!

Projects

Advancing robotics through the power of data science

Robotics

Camera-Based Human Tracking and Following Algorithm for Mobile Robots

Developed a camera-based human tracking pipeline that enables airport robots to be relocated safely and efficiently. The algorithm detects and tracks people in real time using Ultralytics' YOLOv11 model and a 3D depth camera to generate a dynamic path plan.
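
The description does not spell out how detections become a path plan. One common approach is to take the person's bounding box from the detector and read the depth camera inside that box. The box center is then back-projected with a pinhole camera model, and a goal is placed a fixed distance short of the person. The minimal sketch below assumes the YOLO detection step has already produced the box. The intrinsics (`FX`, `CX`), the standoff distance, and the function names are hypothetical placeholders. It illustrates the general technique and is not the project's code.

```python
import math
from statistics import median

# Hypothetical depth-camera intrinsics (placeholders, not real calibration values).
FX = 600.0  # focal length in pixels
CX = 320.0  # principal point, x coordinate in pixels
FOLLOW_DISTANCE_M = 1.5  # hypothetical standoff distance from the person


def person_position(box, depth):
    """Estimate (x, z) of a person in the camera frame, in meters.

    box:   (x1, y1, x2, y2) integer pixel bounding box, assumed to come from a
           person detector such as a YOLO model.
    depth: depth image as a list of rows, values in meters (0 = no reading).
    Returns None when the box contains no valid depth readings.
    """
    x1, y1, x2, y2 = box
    # Sample only the central half of the box to avoid background pixels near its edges.
    u0, u1 = x1 + (x2 - x1) // 4, x2 - (x2 - x1) // 4
    v0, v1 = y1 + (y2 - y1) // 4, y2 - (y2 - y1) // 4
    samples = [depth[v][u] for v in range(v0, v1) for u in range(u0, u1) if depth[v][u] > 0]
    if not samples:
        return None
    z = median(samples)  # the median is less sensitive to noisy or occluded pixels than the mean
    u_center = (x1 + x2) / 2
    x = (u_center - CX) * z / FX  # pinhole back-projection of the box center
    return x, z


def follow_goal(position):
    """Return a goal (x, z) on the line to the person, FOLLOW_DISTANCE_M short of them."""
    x, z = position
    dist = math.hypot(x, z)
    if dist <= FOLLOW_DISTANCE_M:
        return 0.0, 0.0  # already within the standoff distance: hold position
    scale = (dist - FOLLOW_DISTANCE_M) / dist
    return x * scale, z * scale
```

For example, suppose a person is centered in a 640-pixel-wide image at a depth of 3 m. With these placeholder values, `person_position` returns `(0.0, 3.0)` and `follow_goal` returns `(0.0, 1.5)`, a goal 1.5 m straight ahead.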

ROS Python HTML JS OpenCV Gazebo Computer Vision Deep Learning YOLO HRI CUDA

Cloud-Hosted Fleet Monitoring and Control App for Mobile Robots

Built a comprehensive cloud-based platform for real-time fleet monitoring, control, and analytics with web and mobile interfaces.

React Node.js AWS

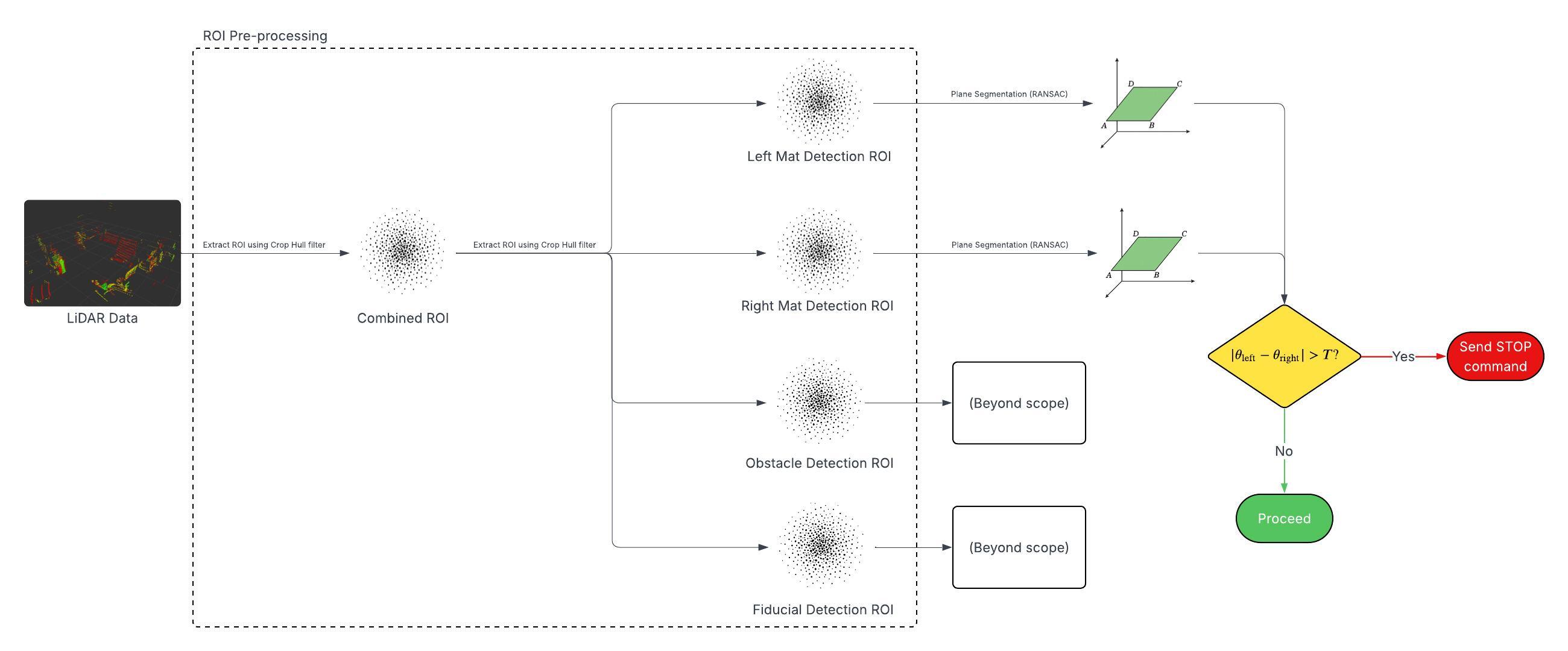

LiDAR-Based Detection of Planar Surfaces for Robotic Process Monitoring

Developed a LiDAR-based system for detecting and analyzing planar surfaces in industrial environments to enable precise robotic process monitoring and quality control.
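
The tags point to RANSAC for finding planes in LiDAR point clouds. In PCL this is commonly done with `pcl::SACSegmentation` configured with `pcl::SACMODEL_PLANE` and `pcl::SAC_RANSAC`. The minimal, dependency-free Python sketch below shows the underlying RANSAC idea and assumes points are `(x, y, z)` tuples in meters. The function names, distance threshold, and iteration count are illustrative choices, not values from the project.

```python
import random


def plane_from_points(p1, p2, p3):
    """Return (a, b, c, d) with unit normal (a, b, c) so that ax + by + cz + d = 0.

    Returns None if the three points are (nearly) collinear.
    """
    ux, uy, uz = (p2[i] - p1[i] for i in range(3))
    vx, vy, vz = (p3[i] - p1[i] for i in range(3))
    # The plane normal is the cross product of two in-plane vectors.
    a, b, c = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    norm = (a * a + b * b + c * c) ** 0.5
    if norm < 1e-12:
        return None
    a, b, c = a / norm, b / norm, c / norm
    d = -(a * p1[0] + b * p1[1] + c * p1[2])
    return a, b, c, d


def ransac_plane(points, threshold=0.01, iterations=200, seed=0):
    """Fit the dominant plane in a point cloud with RANSAC.

    points:    list of (x, y, z) tuples.
    threshold: max point-to-plane distance (same units as points) for an inlier.
    Returns (plane, inlier_indices) for the candidate with the most inliers.
    """
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        # Hypothesize a plane from three randomly chosen points.
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        # Score it by counting points close to the plane (|normal . p + d| is the distance).
        inliers = [i for i, (x, y, z) in enumerate(points)
                   if abs(a * x + b * y + c * z + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers
```

Each iteration fits a candidate plane to three random points and counts how many points lie within `threshold` of it, and the candidate with the most inliers wins. A production pipeline would typically refine the winning plane with a least-squares fit over its inliers. It would then repeat the search on the remaining points to find additional planes.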

ROS Python C++ LiDAR Point Cloud PCL RANSAC Industrial Automation

Camera-Based Lane Detection Algorithm for Autonomous Vehicles

Implemented a robust lane detection system using computer vision techniques to identify road lanes and provide navigation guidance for autonomous vehicle systems.
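
The tags name edge detection and the Hough transform. With OpenCV, that stage is often `cv2.Canny` followed by `cv2.HoughLinesP`. The minimal sketch below is plain Python with no OpenCV dependency. It shows only the voting step of the standard Hough transform and assumes edge pixels have already been extracted. The function name and default parameters are illustrative, not taken from the project.

```python
import math
from collections import Counter


def hough_lines(edge_points, theta_steps=180, rho_res=1.0, min_votes=20):
    """Vote for lines rho = x*cos(theta) + y*sin(theta) through edge pixels.

    edge_points: iterable of (x, y) pixel coordinates of edge pixels.
    Returns (rho, theta_degrees, votes) tuples with at least min_votes votes,
    strongest first.
    """
    thetas = [math.pi * k / theta_steps for k in range(theta_steps)]
    trig = [(math.cos(t), math.sin(t)) for t in thetas]
    votes = Counter()  # accumulator indexed by (rho bin, theta index)
    for x, y in edge_points:
        # Each edge pixel votes once per theta for the line through it at that angle.
        for k, (c, s) in enumerate(trig):
            rho_bin = round((x * c + y * s) / rho_res)
            votes[(rho_bin, k)] += 1
    lines = [(rho_bin * rho_res, 180.0 * k / theta_steps, n)
             for (rho_bin, k), n in votes.items() if n >= min_votes]
    return sorted(lines, key=lambda line: -line[2])
```

Collinear pixels pile their votes into the same `(rho, theta)` bin, so the strongest bins correspond to the dominant straight edges. For lane finding, one would usually restrict edges to a region of interest ahead of the vehicle and discard near-horizontal lines before fitting the lanes.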

Python OpenCV Computer Vision Edge Detection Hough Transform Autonomous Vehicles Machine Learning

Curriculum Vitae

Education, Experience & Skills

Overview

Work

Robotics Engineer

Essential Aero

Enhanced the safety of state-of-the-art AGVs used for airport logistics by optimizing the processing of LiDAR and radar point cloud data using C++ and PCL

- Developed a YOLOv11-based human tracking pipeline for seamless relocation of robots

- Developed a YOLOv11-based aircraft detection pipeline to ensure AGVs navigate safely in a busy airport environment

- Built and optimized CUDA-enabled Dockerfiles for deploying GPU-accelerated deep learning models in production

- Created applications to control and configure AGVs using HTML, JavaScript, and CSS

- Simulated and validated robotic applications in Gazebo Sim

- Recorded ROS2 data published by robots for visualization and analysis in RVIZ2

- Used multithreading to optimize a ROS2 data parser, resulting in an 800% increase in efficiency

- Conducted extensive field testing and reported issues on Jira and Epsilon3

- Collaborated with engineers using Git workflows to resolve merge conflicts and conduct code reviews

- Leveraged Cursor AI and Claude to generate, refactor, and debug code, speeding up development and improving code quality

Software Engineer Intern

Genasys Inc.

Streamlined the task of creating bootable USB drives, resulting in a 50% increase in efficiency

- Supported software development by reporting bugs to engineers in Jira

- Verified the integrity of custom-made test cables by using a Fluke cable tester

- Streamlined cable testing procedures by developing precise and easily replicable testing instructions using Microsoft Office applications

- Applied Agile methodology to log the bill of materials (BOM) for test cables and the instructions used for testing

Software Engineer Intern

Genasys Inc.

Developed a GUI program in Python using the NumPy, pandas, and Tkinter libraries to generate test parameters from company data, resulting in a 10% increase in test accuracy

- Contributed changes to a Bitbucket repository using the Gitflow workflow

- Developed a Windows Forms app in C# that controls an Audio Power Board (APB) via USB

- Leveraged Slack to efficiently communicate with cross-continental engineering teams

Education

Bachelor of Science in Data Science

University of California, San Diego

Focus on machine learning and artificial intelligence. Senior project: "Deep Learning in Autonomous Vehicles"

- Programming & Data Structures

- Probability & Statistics

- Data Management

- Robotics

Technical Skills

Programming Languages

C++, Python, JavaScript, HTML/CSS, C#, SQL, R

Frameworks & Tools

ROS2, NumPy, pandas, RVIZ2, Gazebo Sim, Roboflow, Docker, CUDA, YOLO, PCL, AWS, RStudio

Other

Agile, Slack, Jira, Git, Bitbucket, Microsoft Office Suite